Kafka Asynchronous consumers

Background

The use of Kafka in projects is increasing by the day, as this Google Trends report shows: https://trends.google.com/trends/explore?date=today%205-y&q=%2Fm%2F0zmynvd

In parallel, the use of asynchronous, non-blocking code is also increasing by the day.

Please read these stories to know more:

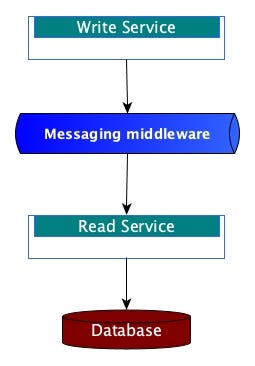

Messaging Middleware

As seen above, messaging helps decouple two parts of a service-based system. The write service writes to the messaging middleware and continues its work. The read service reads from the messaging middleware at its own pace and does its work, which in this case happens to be writing to a database.

Examples of messaging middleware are RabbitMQ (AMQP in general), ActiveMQ, Kafka, etc.

Kafka Middleware

As seen above, Kafka plays the same role: it decouples two parts of a service-based system. The write service writes to Kafka and continues its work. The read service reads from Kafka at its own pace and does its work, which in this case happens to be writing to a database.

So far so good.

Kafka Details

Partitions

For simplicity, assume we have a Kafka producer writing messages to a topic that has n partitions. Each partition is read by one consumer, and each consumer writes the messages it reads to a database.

Messages are guaranteed to be ordered within a partition.

Synchronous consumer (Messages 1 to 8 in one partition)

As seen above, the consumer reads each message and stores it in the database. This is a synchronous process, so each read offset can be committed only after the message has been written to the database.
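The synchronous flow can be sketched without a real broker. This is a minimal simulation, not Kafka client code: `partition`, `database`, `committed_offset` and `write_to_database` are hypothetical in-memory stand-ins, and the offset is treated as "the number of the last message processed", as in the description above.

```python
# In-memory stand-ins (assumptions for illustration, not Kafka client APIs).
partition = [f"message-{n}" for n in range(1, 9)]  # messages 1 to 8
database = {}
committed_offset = 0  # 0 means nothing has been committed yet


def write_to_database(offset, message):
    """Pretend to store one message; a real write could raise on failure."""
    database[offset] = message


def consume_synchronously():
    global committed_offset
    for offset, message in enumerate(partition, start=1):
        write_to_database(offset, message)  # returns only when the write is done
        committed_offset = offset           # commit only after a successful write


consume_synchronously()
print(committed_offset, len(database))  # 8 8
```

Because the loop handles one message at a time, a failed write would raise before the commit line runs, so the committed offset can never get ahead of what is actually stored.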

Asynchronous consumer (Messages 1 to 8 in one partition, six asynchronous read processes in technologies like Kotlin or Akka)

As seen above the consumer reads each message and stores it into the database.

It is an asynchronous process.

Each read offset can still be committed only after the message is written to the database, just like the previous case.

However, because of the asynchronous nature of the process, process 6 could complete before process 1.

If process 6 commits offset 8, Kafka assumes that the consumer has read all the messages 1 to 8.

If all the other messages succeed writing to the database, we have no issue.

However, if any of the messages 1 to 7 fails to be written to the database, Kafka still considers all of them successfully consumed, because offset 8 has already been committed.
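The failure mode can be reproduced with a small simulation. This is a sketch under stated assumptions, not Kafka client code: `database`, `committed` and `FAILING_OFFSETS` are hypothetical in-memory stand-ins, one `asyncio` task is started per message for simplicity (rather than six processes), and the write for message 3 is assumed to fail.

```python
import asyncio

# Hypothetical stand-ins: an in-memory "database" and a single committed offset.
database = {}
committed = {"offset": 0}
FAILING_OFFSETS = {3}  # assumption: the write for message 3 fails


async def handle(offset, delay):
    await asyncio.sleep(delay)  # simulated asynchronous database write
    if offset in FAILING_OFFSETS:
        raise RuntimeError(f"write failed for message {offset}")
    database[offset] = f"message-{offset}"
    # Naive commit: each task commits its own offset as soon as it finishes.
    committed["offset"] = max(committed["offset"], offset)


async def main():
    # Later messages get shorter delays, so message 8 finishes first.
    tasks = [handle(offset, delay=(9 - offset) * 0.01) for offset in range(1, 9)]
    return await asyncio.gather(*tasks, return_exceptions=True)


results = asyncio.run(main())
missing = [o for o in range(1, committed["offset"] + 1) if o not in database]
print(committed["offset"], missing)  # 8 [3]
```

The committed offset ends at 8 even though message 3 never reached the database, which is exactly the gap described above.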

Verdict

Do we really need asynchronous consumer processing when reading from each Kafka partition?

If the answer is yes, then the default auto-commit may work for happy paths, but we do need to work out a design that will deal with failures.
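One possible design, offered here only as an illustration and not as the approach this article prescribes, is to commit the highest offset below which every message has completed, instead of letting each process commit its own offset. The `ContiguousOffsetTracker` class below is a hypothetical helper written for this sketch; it is not part of any Kafka client library.

```python
class ContiguousOffsetTracker:
    """Tracks completed offsets and exposes the highest gap-free one."""

    def __init__(self, start=0):
        self.committable = start  # every offset <= this value has completed
        self._done = set()        # completed offsets above the watermark

    def mark_done(self, offset):
        self._done.add(offset)
        # Advance past every consecutive completed offset.
        while self.committable + 1 in self._done:
            self.committable += 1
            self._done.remove(self.committable)
        return self.committable


tracker = ContiguousOffsetTracker()
for finished in (8, 2, 1, 4):  # completion order; message 3 is not done yet
    tracker.mark_done(finished)
print(tracker.committable)     # 2

tracker.mark_done(3)           # message 3 finally succeeds
print(tracker.committable)     # 4
```

With this scheme, a consumer that crashes while message 3 is still failing would restart from message 3 and reprocess later messages, so the database writes would need to tolerate duplicates.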

Look at each of the use cases for the project in detail.

For example, consider these two use cases:

- A text event service where we need to make sure all data is saved

- A price tick event service where the event contains all data, and if a tick is missed then there is no problem.

In the first use case we do not want to consume a single Kafka partition in an asynchronous way.

In the second use case we could consume a single Kafka partition in an asynchronous way, with a retry in the process itself when the write to the database fails, so as not to lose data unnecessarily. In this case, if the consumer VM crashes, data could be lost, but we are OK with data loss as per our use case.
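A retry inside the process could look roughly like the following. This is a minimal sketch: `write_with_retry`, `flaky_write`, the attempt count and the back-off delay are all assumptions made for illustration, and the "database" is a plain list.

```python
import asyncio


async def write_with_retry(write, tick, attempts=3, base_delay=0.01):
    """Try the write up to `attempts` times; return True on success."""
    for attempt in range(1, attempts + 1):
        try:
            await write(tick)
            return True
        except Exception:
            if attempt == attempts:
                return False  # give up; a lost tick is acceptable in this use case
            await asyncio.sleep(base_delay * attempt)  # simple linear back-off


# Hypothetical flaky database: the first two writes fail, the third succeeds.
calls = {"count": 0}
stored = []


async def flaky_write(tick):
    calls["count"] += 1
    if calls["count"] < 3:
        raise ConnectionError("database unavailable")
    stored.append(tick)


ok = asyncio.run(write_with_retry(flaky_write, {"symbol": "ABC", "price": 101.5}))
print(ok, calls["count"], len(stored))  # True 3 1
```

The retry lives entirely in memory, so it covers transient database errors but not a crash of the consumer itself, which matches the trade-off accepted for price ticks.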

In both use cases, multiple consumers on multiple partitions will work fine.